PHP Website Crawler Tutorials for February, 2026

Whether you are looking to obtain data from a website, track changes on the internet, or use a website API, website crawlers are a great way to get the data you need.

While they have many components, crawlers fundamentally use a simple process: download the raw data, process and extract it, and, if desired, store the data in a file or database. There are many ways to do this, and many languages you can build your spider or crawler in.
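The three steps above can be sketched in a few lines of PHP. This is a minimal illustration rather than a finished crawler: the function names (`downloadPage`, `extractTitle`, `storeRecord`) are hypothetical, pulling out the page title is just one example of "extraction", and writing one JSON object per line to a file is an assumed storage format.

```php
<?php
// Step 1: download the raw data. Returns null if the request fails.
function downloadPage(string $url): ?string
{
    $ch = curl_init($url);
    curl_setopt_array($ch, [
        CURLOPT_RETURNTRANSFER => true,  // return the body instead of printing it
        CURLOPT_FOLLOWLOCATION => true,  // follow redirects
        CURLOPT_TIMEOUT        => 15,    // seconds
    ]);
    $body = curl_exec($ch);
    // On PHP 8+, the handle is released automatically when it goes out of scope.
    return is_string($body) ? $body : null;
}

// Step 2: process the HTML and extract what we need (here, the <title>).
function extractTitle(string $html): ?string
{
    if ($html === '') {
        return null;
    }
    $doc = new DOMDocument();
    // Real-world HTML is often malformed, so collect parser warnings quietly.
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $nodes = $doc->getElementsByTagName('title');
    return $nodes->length > 0 ? trim($nodes->item(0)->textContent) : null;
}

// Step 3: store the result, one JSON object per line.
function storeRecord(string $file, array $record): void
{
    file_put_contents($file, json_encode($record) . "\n", FILE_APPEND | LOCK_EX);
}
```

A typical run would call `downloadPage()` on a URL, pass the result to `extractTitle()`, and hand an array such as `['url' => $url, 'title' => $title]` to `storeRecord()`. Swapping the file for a database only changes the third function.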

If you’re just getting started, begin with this tutorial on downloading webpages using PHP.

If you’re curious about the legality of web crawlers, we wrote an article summarizing some of the US web crawling cases.

Looking for some quick code to make your development life a bit easier? Try this article on PHP web crawler development techniques we use here at Potent Pages.

PHP Web Crawler Tutorials

Downloading a Webpage Using Selenium & PHP

Wondering how to control Chrome using PHP? Want to extract all of the visible text from a webpage? In this tutorial, we use Selenium and PHP to do this!

Downloading a Webpage Using PHP and cURL

Looking to automatically download webpages? Here’s how to download a page using PHP and cURL.

Quick PHP Web Crawler Techniques

Looking to have your web crawler do something specific? Try this page. We have some code that we regularly use for PHP web crawler development, including extracting images, links, and JSON from HTML documents.
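As a rough idea of what such helpers can look like, here is a sketch using PHP's built-in DOM extension and XPath. It is not the code from the linked article: the function names are hypothetical, and "JSON" is assumed here to mean JSON-LD embedded in `<script type="application/ld+json">` tags.

```php
<?php
// Parse an HTML string and return an XPath object for querying it.
function htmlToXPath(string $html): DOMXPath
{
    $doc = new DOMDocument();
    if ($html !== '') {
        // Collect parser warnings quietly; scraped HTML is rarely valid.
        $previous = libxml_use_internal_errors(true);
        $doc->loadHTML($html);
        libxml_clear_errors();
        libxml_use_internal_errors($previous);
    }
    return new DOMXPath($doc);
}

// Return the values of every attribute node matched by an XPath query.
function extractAttribute(string $html, string $query): array
{
    $values = [];
    foreach (htmlToXPath($html)->query($query) as $attr) {
        $values[] = trim((string) $attr->nodeValue);
    }
    return $values;
}

// All link targets, exactly as written in the page (not resolved to absolute URLs).
function extractLinks(string $html): array
{
    return extractAttribute($html, '//a/@href');
}

// All image sources, exactly as written in the page.
function extractImages(string $html): array
{
    return extractAttribute($html, '//img/@src');
}

// Decode every JSON-LD block that contains valid JSON.
function extractJsonLd(string $html): array
{
    $items = [];
    foreach (htmlToXPath($html)->query('//script[@type="application/ld+json"]') as $script) {
        $decoded = json_decode($script->textContent, true);
        if (is_array($decoded)) {
            $items[] = $decoded;
        }
    }
    return $items;
}
```

The returned links and image paths are left untouched, so relative URLs still need to be resolved against the page's address before they can be downloaded.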

Creating a Simple PHP Web Crawler

Looking to download a site or multiple webpages? Interested in examining all of the titles and descriptions for a site? We created a quick tutorial on building a script to do this in PHP. Learn how to download webpages and follow links to download an entire website.
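The core idea of following links can be sketched as a breadth-first loop over a queue of URLs. The version below is an illustration under several assumptions, not the tutorial's code: `crawlSite` is a hypothetical name, the downloader is passed in as a callable so any download function can be plugged in, and only absolute and root-relative links on the starting scheme and host are followed (ports, `../` paths, and other relative forms are ignored).

```php
<?php
/**
 * Breadth-first crawl starting at $startUrl, staying on the same host.
 * $fetch is any callable that takes a URL and returns HTML (or null on failure).
 * Returns a map of URL => page title for every page that was downloaded.
 */
function crawlSite(string $startUrl, callable $fetch, int $maxPages = 50): array
{
    $parts = parse_url($startUrl);
    if (!isset($parts['scheme'], $parts['host'])) {
        return [];  // not an absolute URL
    }
    $origin = $parts['scheme'] . '://' . $parts['host'];

    $queue  = [$startUrl];           // URLs waiting to be downloaded
    $seen   = [$startUrl => true];   // URLs already queued, to avoid loops
    $titles = [];

    while ($queue !== [] && count($titles) < $maxPages) {
        $url  = array_shift($queue);
        $html = $fetch($url);
        if (!is_string($html) || $html === '') {
            continue;  // skip pages that failed to download
        }

        $doc = new DOMDocument();
        $previous = libxml_use_internal_errors(true);
        $doc->loadHTML($html);
        libxml_clear_errors();
        libxml_use_internal_errors($previous);

        $titleNode    = $doc->getElementsByTagName('title')->item(0);
        $titles[$url] = $titleNode ? trim($titleNode->textContent) : '';

        foreach ($doc->getElementsByTagName('a') as $a) {
            // Drop any #fragment so the same page is not queued twice.
            $href = explode('#', trim($a->getAttribute('href')), 2)[0];
            if ($href === '') {
                continue;
            }
            if ($href[0] === '/' && substr($href, 0, 2) !== '//') {
                $href = $origin . $href;  // root-relative link
            }
            if (strpos($href, $origin . '/') !== 0) {
                continue;  // off-site link or an unsupported relative form
            }
            if (!isset($seen[$href])) {
                $seen[$href] = true;
                $queue[]     = $href;
            }
        }
    }
    return $titles;
}
```

The `$maxPages` limit is a safety valve: without it, a large site or a calendar-style page that generates endless links would keep the loop running indefinitely.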

Creating a Polite PHP Web Crawler: Checking robots.txt

In this tutorial, we create a PHP website spider that uses the robots.txt file to know which pages we’re allowed to download. We build on our previous tutorials to create a robust web spider that checks crawling permissions before downloading a page.
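To give a sense of what a robots.txt check involves, here is a simplified sketch in plain PHP. It is an illustration with stated limits, not the tutorial's implementation: the function names are hypothetical, user-agent names are compared as exact case-insensitive strings, and rules are treated as plain path prefixes, so the `*` and `$` wildcards that some sites use are not handled.

```php
<?php
/**
 * Parse robots.txt text and return the Allow/Disallow rules that apply to $agent.
 * Falls back to the "*" group when no group names the agent.
 * Each rule is a two-element array: ['allow' or 'disallow', path prefix].
 */
function parseRobotsRules(string $robotsTxt, string $agent): array
{
    $groups  = [];     // lowercased user-agent => list of rules
    $current = [];     // user-agents named by the group being read
    $inRules = false;  // true once the current group has seen a rule line

    foreach (preg_split('/\r\n|\r|\n/', $robotsTxt) as $line) {
        $line = trim(preg_replace('/#.*$/', '', $line));  // strip comments
        if ($line === '' || strpos($line, ':') === false) {
            continue;
        }
        [$field, $value] = array_map('trim', explode(':', $line, 2));
        $field = strtolower($field);

        if ($field === 'user-agent') {
            if ($inRules) {            // a User-agent line after rules starts a new group
                $current = [];
                $inRules = false;
            }
            $name = strtolower($value);
            $current[] = $name;
            $groups[$name] = $groups[$name] ?? [];
        } elseif ($field === 'allow' || $field === 'disallow') {
            $inRules = true;
            foreach ($current as $name) {
                $groups[$name][] = [$field, $value];
            }
        }
    }
    return $groups[strtolower($agent)] ?? $groups['*'] ?? [];
}

// Decide whether $path may be fetched: the longest matching prefix wins,
// and Allow wins a tie. A path that matches no rule is allowed.
function isPathAllowed(array $rules, string $path): bool
{
    $allowed    = true;
    $bestLength = -1;
    foreach ($rules as [$type, $prefix]) {
        if ($prefix === '' || strncmp($path, $prefix, strlen($prefix)) !== 0) {
            continue;  // an empty rule or a non-matching prefix has no effect
        }
        $length = strlen($prefix);
        if ($length > $bestLength || ($length === $bestLength && $type === 'allow')) {
            $bestLength = $length;
            $allowed    = ($type === 'allow');
        }
    }
    return $allowed;
}
```

In a crawler, the rules would be parsed once per site and `isPathAllowed()` called with each URL's path before it is downloaded.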

Getting Blocked? Use a Free Proxy

If you’re tired of getting blocked when using your web crawlers, we recommend using a free proxy. In this article, we go over what proxies are, how to use them, and where to find free ones.

Other PHP Web Crawler Tutorials from Around the Web

PHP Simple Web Crawler

This is a very simple web crawler that uses plain PHP, the cURL extension, and the DOM extension to extract movie information from IMDb.

Web Scraping with PHP

This tutorial goes over several methods of crawling sites using PHP, including sockets, cURL, and Guzzle, and covers extraction using both the PHP DOM parser and regular expressions.

Web Scraping with PHP – How to Crawl Web Pages Using Open Source Tools

This set of tutorials by Manthan Koolwal shows how to download webpages in PHP using Guzzle, Goutte, and the headless browser Symfony Panther.

Web Scraping with PHP: a Step-By-Step Tutorial

This tutorial shows how to download webpages using the cURL library and to parse them using the Simple HTML DOM library.

How To Create A Simple Web Crawler in PHP

This tutorial by Subin Siby covers how to create a simple web crawler using PHP to download pages and extract data from their HTML. It includes a demo of the process and uses the Simple HTML DOM class for easier page processing.

How to Create a Web Spy with a PHP Crawler

This is a tutorial made by 1st Web Designer on how to create a web crawler in PHP in 5 steps. The tutorial explains how to create a MySQL database, how to obtain data, and how to save it.

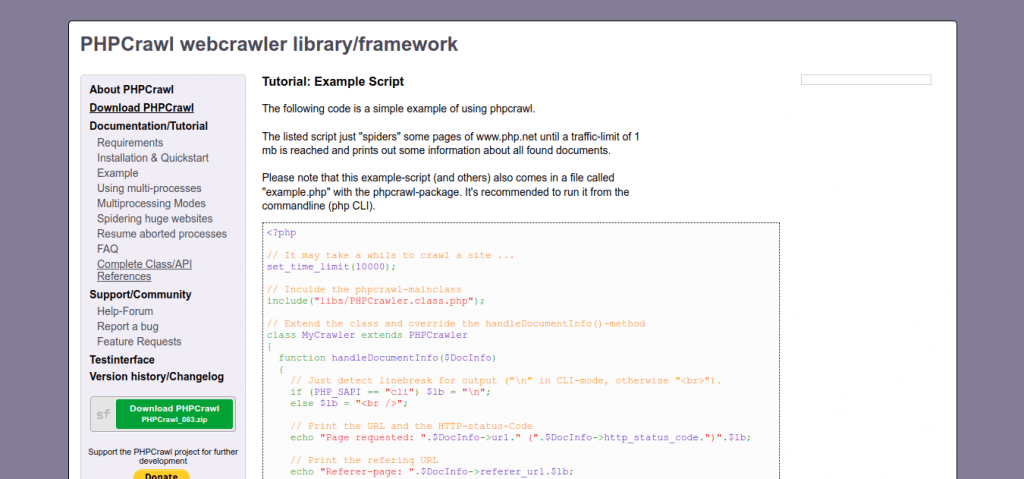

PHPCrawl webcrawler library for PHP – Example script

This tutorial, published on the PHPCrawl website, covers building a crawler in PHP using the PHPCrawl library. It provides a brief explanation and a sample script demonstrating how to use the library.

PHP Tutorial: Making a Webcrawler

This is a PHP tutorial made by Tim van Osch about building a web crawler using PHP. It includes code for setting up a web server with the required MySQL database and shows how to use the base PHP file to build a functional crawler.